In late November the U.S. Food and Drug Administration granted emergency use authorization to baricitinib, an existing Eli Lilly arthritis drug that Benevolent AI had recommended, as a COVID-19 treatment in combination with remdesivir, just nine months after the hypothesis was developed. The link between the properties of this drug and a potential treatment for seriously ill COVID-19 patients was made with the help of knowledge graphs, which represent data in context in a manner that humans and machines can readily understand.

Knowledge graphs apply semantics to give context and relationships to data, providing a framework for data integration, unification, analytics and sharing. Think of them as a flexible means of discovering facts and relationships between people, processes, applications and data, in ways that give companies new insights into their businesses, help them create new services and improve R&D.
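To make the idea concrete, here is a minimal sketch in Python, assuming the common subject-predicate-object ("triple") representation used by the W3C's RDF data model. The `KnowledgeGraph` class, its `match` method and every entity name are hypothetical, chosen only for illustration; production systems use dedicated graph databases and query languages such as SPARQL.

```python
# A toy knowledge graph: every fact is a (subject, predicate, object) triple,
# the same shape used by the W3C's RDF data model. All names are hypothetical.

Triple = tuple[str, str, str]


class KnowledgeGraph:
    def __init__(self) -> None:
        self.triples: set[Triple] = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        """Record one fact linking two entities."""
        self.triples.add((subject, predicate, obj))

    def match(self, subject=None, predicate=None, obj=None) -> list[Triple]:
        """Return facts matching a pattern; None acts as a wildcard."""
        return sorted(
            t
            for t in self.triples
            if (subject is None or t[0] == subject)
            and (predicate is None or t[1] == predicate)
            and (obj is None or t[2] == obj)
        )


kg = KnowledgeGraph()
kg.add("alice", "worksOn", "pricing_project")
kg.add("bob", "worksOn", "pricing_project")
kg.add("pricing_project", "uses", "billing_app")
kg.add("billing_app", "readsFrom", "customer_table")

# One hop: who works on the pricing project?
people = [s for s, _, _ in kg.match(predicate="worksOn", obj="pricing_project")]

# Two hops: which applications does Alice's project depend on?
apps = [
    app
    for _, _, project in kg.match(subject="alice", predicate="worksOn")
    for _, _, app in kg.match(subject=project, predicate="uses")
]

print(people)  # ['alice', 'bob']
print(apps)    # ['billing_app']
```

Because relationships are stored as data rather than baked into a fixed table schema, adding a new kind of connection is simply another `add` call.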

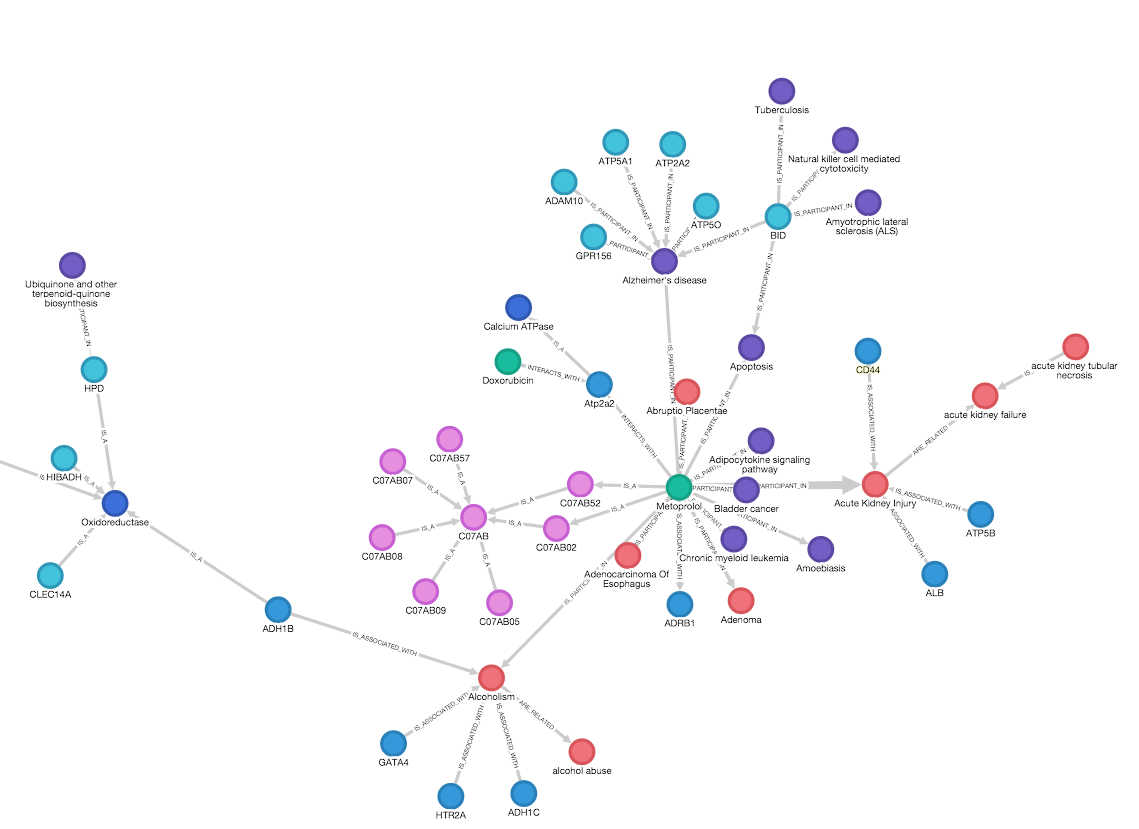

Benevolent AI, a six-year-old London-based company, has developed a platform of computational and experimental technologies and processes that draws on vast quantities of biomedical data to advance drug development, and it has built knowledge graphs into that platform from day one. “In the human genome there are about 20,000 genes with a much smaller number of approved drugs. The number of entities out there isn’t very large but the number of connections between them ranks in the hundreds of millions and that is only scratching the surface of what’s known,” says Olly Oechsle, a senior software engineer at Benevolent AI. “Having a graph system that is able to help you navigate between all of those connections is vital.”
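Oechsle's point about navigating connections can be illustrated with a breadth-first search that finds the shortest chain of relationships linking two entities. This is a sketch under simple assumptions: the edges and entity names (`drug_A`, `protein_P` and so on) are invented placeholders rather than real biomedical data, and the code does not describe Benevolent AI's platform.

```python
from collections import deque

# Hypothetical directed edges: (source, relation, target). Not real biomedical data.
EDGES = [
    ("drug_A", "inhibits", "protein_P"),
    ("protein_P", "regulates", "process_Q"),
    ("process_Q", "involved_in", "disease_D"),
    ("drug_B", "inhibits", "protein_R"),
]


def build_adjacency(edges):
    """Map each entity to its outgoing (relation, target) pairs."""
    adj = {}
    for src, rel, dst in edges:
        adj.setdefault(src, []).append((rel, dst))
    return adj


def find_path(adj, start, goal):
    """Breadth-first search: shortest chain of (source, relation, target) hops, or None."""
    queue = deque([start])
    came_from = {start: None}  # entity -> (previous entity, relation used to reach it)
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while came_from[node] is not None:
                prev, rel = came_from[node]
                path.append((prev, rel, node))
                node = prev
            return list(reversed(path))
        for rel, nxt in adj.get(node, []):
            if nxt not in came_from:
                came_from[nxt] = (node, rel)
                queue.append(nxt)
    return None


adj = build_adjacency(EDGES)
print(find_path(adj, "drug_A", "disease_D"))
print(find_path(adj, "drug_B", "disease_D"))  # None: no chain of known relationships
```

Each hop in the returned path carries its relationship label, which is what makes a graph answer explainable: a reader can see why two entities are connected, not just that they are.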

Oechsle was one of seven panelists who participated in a December 7 roundtable discussion on the power of graphs organized by DataSeries, a global network of data leaders led by venture capital firm OpenOcean, and moderated by Innovator Editor-in-Chief Jennifer L. Schenker. Until recently, knowledge graphs were mainly used by young companies like Benevolent AI or tech companies like Google, Facebook, LinkedIn and Amazon. But large corporates in traditional sectors such as chemicals and finance are starting to discover the power of graphs and, as adoption grows, it is expected to cause a paradigm shift in data management. What’s more, as graphs mature they could lead to more robust and reliable AI systems.

Knowledge graphs are a game changer that helps companies move away from relational databases and harness natural language processing, semantic understanding and machine learning to get more out of their data, says panelist Michael Atkin, a principal at agnos.ai, a specialist consultancy that designs and implements enterprise knowledge graphs. The advantages to business are clear.

Graphs “are a prerequisite for achieving smart, semantic AI-powered applications that can help you discover facts from your content, data and organizational knowledge which would otherwise go unnoticed,” says Atkin, who is also director of the Enterprise Knowledge Graph Foundation. They help corporates organize the information from disparate data sources to facilitate intelligent search. They make data understandable in business terms rather than in symbols only understood by a handful of specialized personnel. And they speed digital transformation by delivering a “digital twin” of a company that encompasses all data points as well as the relationships between data elements. “By fundamentally understanding the way all data relates throughout the organization, graphs offer an added dimension of context which informs everything from initial data discovery to flexible analytics,” he says. “Graphs give corporates the ability to ask business questions and get business answers and value. These developments promise to enhance productivity and usher in a new era of business opportunity.”

Breaking Data Silos

Today, the infrastructure for managing data in most major corporations is based on decades-old technology. Line-of-business and functional silos are everywhere. They are exacerbated by relational database management systems, which are built on physical data elements stored as columns in tables and are constrained by the lack of data standards. “Data meaning is tied to proprietary data models and managed independently,” explains Atkin. When these silos are combined with external models for glossaries, entity relationship diagrams, databases and metadata repositories, the result is incongruent data, and the explosion of uniquely labeled elements makes it nearly impossible to align the silos. As a result, corporates end up with “data that is hard to access, blend, analyze and use, impeding application development, data science, analytics, process automation, reporting and compliance,” he says.

Advantages of Semantic Technology

Semantic technology, which uses formal semantics to help AI systems understand language and process information the way humans do, is seen as the best way to handle large volumes of data in multiple forms. It was designed specifically for interconnected data and is good at unraveling complex relationships. Semantic processing, now a World Wide Web Consortium (W3C) standard, was a huge breakthrough for content management, says Atkin. And because it is an open standard, it has propelled many companies into the world of knowledge management. It is the backbone of the Semantic Web. It is the infrastructure for biomedical engineering in areas such as cancer research and for the Human Genome Project. And it is the basis for what Google and other tech companies are doing with knowledge graphs and graph neural networks (GNNs), a type of neural network that learns directly from graph structure and helps make recommendations for applications like search, e-commerce and computer vision.

A shift away from conventional relational databases to knowledge graphs gives corporates the opportunity to reap some of the same advantages: capturing the meaning of data as well as how concepts are connected. Semantic modeling eliminates the problem of hard-coded assumptions because it focuses on concepts, not specific data formats. Users can understand what the data represents even when it moves across organizational boundaries, allowing efficient reuse across systems and processes. “Instead of data silos we get data that is integrated and linked, and organizations become more efficient because ontologies are standardized and reusable,” says Atkin. This allows for economies of scale and, he says, “moving from data issues to data use cases.”

How Businesses Are Using Knowledge Graphs

Some corporates, like German multinational chemical company BASF, the largest chemical producer in the world, are already reaping the benefits. BASF is applying knowledge graphs to digitalize existing knowledge and support future research and development, says panelist Juergen Mueller, BASF’s Head of Knowledge Architecture. A large-scale knowledge graph powers the company’s internal knowledge system for R&D. It combines ontologies with natural language processing applied to more than 200 million scientific and technical documents. In addition, his team is applying knowledge graphs to aggregate data and information for project-specific applications in R&D and business. “We do our own research and development to bring together explicit semantic expression with graph algorithms and graph neural networks,” says Mueller. “The goal is to create a seamless interaction between humans and machines in building and using knowledge in digital form to answer research and business questions.” One core focus is figuring out how to deal with ambiguity and uncertainty beyond just representing factual knowledge. “We want to represent the process of elaboration of early ideas and hypotheses to falsification or verification over time,” he says. “This would enable us to answer questions like ‘what was known at that point?’, ‘why did we come to that conclusion?’, or ‘what decisions do we need to review given new information?’ Knowledge graphs allow us to deal with high complexity that is not doable in other ways. We need technology that’s really capable of scaling into billions or even trillions of relationships.”
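Mueller's questions about what was known at a given point imply storing each claim together with its status, date and provenance, rather than as a single current fact. The sketch below assumes a simple append-only log of hypothetical `Assertion` records; the `source` field stands in for "why did we come to that conclusion?". The claim and decision names are invented, and this illustrates the idea only; it is not BASF's system.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Assertion:
    claim: str      # hypothetical claim identifier
    status: str     # "hypothesis", "verified" or "falsified"
    recorded: date  # when this status was recorded
    source: str     # provenance: the evidence behind the status


# Append-only history of one invented claim, from early idea to verification to falsification.
LOG = [
    Assertion("catalyst_X_improves_yield", "hypothesis", date(2019, 3, 1), "lab notebook"),
    Assertion("catalyst_X_improves_yield", "verified", date(2019, 9, 15), "pilot run"),
    Assertion("catalyst_X_improves_yield", "falsified", date(2020, 6, 2), "scale-up study"),
]

# Hypothetical decisions: name -> (date decided, claims it relied on).
DECISIONS = {"fund_plant_upgrade": (date(2019, 10, 1), {"catalyst_X_improves_yield"})}


def known_at(log, when):
    """'What was known at that point?': latest recorded assertion per claim as of a date."""
    state = {}
    for entry in sorted(log, key=lambda x: x.recorded):
        if entry.recorded <= when:
            state[entry.claim] = entry
    return state


def decisions_to_review(log, decisions, today):
    """'What decisions do we need to review?': those whose supporting claims changed status."""
    now = known_at(log, today)
    flagged = []
    for name, (decided_on, claims) in decisions.items():
        then = known_at(log, decided_on)
        if any(c in now and (c not in then or then[c].status != now[c].status) for c in claims):
            flagged.append(name)
    return sorted(flagged)


print(known_at(LOG, date(2019, 12, 31))["catalyst_X_improves_yield"].status)  # verified
print(decisions_to_review(LOG, DECISIONS, date(2021, 1, 1)))  # ['fund_plant_upgrade']
```

Keeping the full history, rather than overwriting a claim's status, is what lets the same data answer both "what do we believe now?" and "what did we believe then?".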

Graphs are already playing an important role in drug discovery and promise to do even more. As graphs progress, Benevolent AI’s Oechsle says he is looking forward to the time when all companies will be able to take their data and securely combine it with the knowledge of the world in order to answer questions that are beyond the company’s internal knowledge.

The world’s biggest banks are all experimenting with knowledge graphs, in part because they were mandated to fix their data problems after the 2008 financial crisis, says Atkin. There are two main objectives. One involves control functions such as inventory management, employee connection, data processes, governance, quality requirements and organizational functions to ensure operational resilience, he says. The other is focused on business value proposition objectives such as aggregating things in flexible ways so that banks can look at concepts from various perspectives. This includes product developments, consumer behavior and how they can service their customers in a better way. “The whole point is to be able to customize and personalize your data,” says Atkin. “The underlying drivers of the moment are the infrastructure control processes but the value proposition is personalization. Being able to make that connection between the two is hard, because you need an investment to drive this further.”

That said, “competition is shifting from data being a competitive advantage to companies being at a competitive disadvantage if they don’t use knowledge graphs,” says Atkin. “The mentality of ‘are we being left behind?’ has been injected in many corporations, and that’s great to see.”

It is hard to predict when, or if, knowledge graphs will become the core of most large corporates’ data management strategies, says panelist Francois Scharffe, co-founder of the Knowledge Graph Conference, a global conference on knowledge graphs. “But we are already seeing a lot of interest in this space and increasing excitement, which means that it is definitely closer to a short- or mid-term timeline than a long-term one.”

Making The Leap From Columns To Context

Today, making the leap from columns to context is achievable but not automatic. Most enterprise data is stored in a tables-and-columns format and accessed using SQL, a highly common but formal language, while knowledge graph data is stored in a format that puts more emphasis on connections and is slightly less strict. This means that in order to benefit from knowledge graphs, organizations must invest in new infrastructure, data must be transformed and new skills must be learned, says panelist Amit Weitzner, one of three brothers who co-founded Timbr.ai, an Israeli startup that is helping governments and large corporates enhance their existing data management technologies with knowledge graph capabilities. (The company’s name is derived from Tim Berners-Lee who, together with Timbr.ai advisor Jim Hendler, pioneered the Semantic Web.)

To make this easier, Timbr implemented in SQL the Semantic Web standards originally developed for the Internet, making it possible for enterprises to modernize and enhance existing data technologies with knowledge graph capabilities. Timbr’s knowledge graph acts as a virtual layer that maps data from companies’ existing relational databases.
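One way to picture a virtual knowledge graph layer is as a mapping that turns relational rows into triples on demand, leaving the data in its original tables. The sketch below uses Python's built-in `sqlite3` module; the tables, the `MAPPING` format and the predicate names are hypothetical, and this is not how Timbr's product is implemented or configured.

```python
import sqlite3

# Hypothetical relational data, left in place in its original tables.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, country TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY,
                         customer_id INTEGER REFERENCES customer(id),
                         product TEXT);
    INSERT INTO customer VALUES (1, 'Acme', 'DE'), (2, 'Globex', 'US');
    INSERT INTO orders VALUES (10, 1, 'solvent'), (11, 1, 'pigment'), (12, 2, 'solvent');
    """
)

# Hypothetical mapping: table -> (graph class, {column: predicate},
#                                 {foreign-key column: (predicate, target class)})
MAPPING = {
    "customer": ("Customer", {"name": "hasName", "country": "locatedIn"}, {}),
    "orders": ("Order", {"product": "forProduct"}, {"customer_id": ("placedBy", "Customer")}),
}


def triples(conn, mapping):
    """Yield (subject, predicate, object) triples computed from rows at query time."""
    for table, (cls, columns, fks) in mapping.items():
        cols = ["id", *columns, *fks]
        # Table and column names come from the trusted mapping above, not user input.
        for row in conn.execute(f"SELECT {', '.join(cols)} FROM {table}"):
            record = dict(zip(cols, row))
            subject = f"{cls}/{record['id']}"
            yield (subject, "type", cls)
            for col, pred in columns.items():
                yield (subject, pred, record[col])
            for col, (pred, target) in fks.items():
                # A foreign key becomes an explicit, named relationship between entities.
                yield (subject, pred, f"{target}/{record[col]}")


graph = list(triples(conn, MAPPING))

# Graph question: what are the names of customers who ordered solvent?
solvent_orders = {s for s, p, o in graph if p == "forProduct" and o == "solvent"}
buyers = {o for s, p, o in graph if s in solvent_orders and p == "placedBy"}
names = sorted(o for s, p, o in graph if s in buyers and p == "hasName")
print(names)  # ['Acme', 'Globex']
```

The question is answered by following the named `forProduct` and `placedBy` relationships rather than by hand-writing a SQL join. Calling `list(...)` materializes every triple, which is fine for a small demo; a production virtual layer would more typically rewrite graph queries into SQL instead of enumerating all triples.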

Knowledge graphs are a key part of a larger shift toward a data-centric enterprise, and that shift also requires rethinking software architecture to make it more data-driven and declarative, says panelist Martynas Jusevičius, co-founder of AtomGraph, a company that addresses this issue with knowledge graph management solutions and open-source software for publishing and managing connected data.

While various solutions exist for placing virtual knowledge graphs on top of legacy data management systems, what is missing are user-friendly tools that help non-techies within the business leverage the knowledge graphs, says Jusevičius. “Software design needs to be rethought. If you use knowledge graphs, then your software has to be designed in such a way so that you can truly use all the potential functions that a graph can give you.”

Technical And Organizational Challenges

While a host of young companies are working on solutions to make it easier for corporates to implement knowledge graphs, “we are not at the level that relational databases have reached, where you can confidently place bets with regard to technology choices,” says BASF’s Mueller.

Another barrier is that knowledge graphs are still expensive to implement as there is a need for both data engineers and knowledge engineers that bring domain and ontology modeling expertise, says Scharffe.

Figuring out how to get domain experts to express their knowledge in a digital format is critical. “Making implicit expert knowledge explicit in a systematic way for use by humans and machines is essential to drive future innovation,” says BASF’s Mueller. “Just writing down findings in documents to be read by human experts is not sufficient.” Costly natural language processing (NLP) techniques are required to extract structure from such unstructured data and turn it into something machine-processable, or even searchable, at scale. “Expressing knowledge through the construction of comprehensive ontologies has a steep learning curve for domain experts not familiar with Semantic Web technologies,” he says. “Therefore, we need new approaches and tools to make the capture and leverage of knowledge in digital form as easy, efficient, and fast as possible.”

Perhaps the greatest challenge is a necessary mindset shift by top executives at traditional companies. “The right leadership is needed to facilitate innovation and give strategic support for the required changes that accompany knowledge graphs,” says Atkin.

It is also important for corporates to set realistic expectations. “People want short term wins but don’t understand that it takes time to get to the true wins,” says Timbr.ai’s Weitzner. “If there is no fast return on investment people tend to give up. If you can shorten this time and build a knowledge graph that will give you value in a certain function, then you will be able to reap value much faster and also foster a shift in mindset.” He recommends starting with one use case and setting clear KPIs. “The beauty of knowledge graphs is you can implement new use cases and onboard other data sources too,” he says. “Start with one pain point and solve a problem, even if it’s minor.”

Leveraging The Marriage Of Graphs And Machine Learning

Once knowledge graphs become widely used, they will not only radically change the way data is managed but also affect machine learning and AI training, helping to guide and speed up the learning process for machines, says Scharffe. “An ML training process is reviving every possible data experience in order to generalize. If there is a graph in place that acts as a knowledgeable instructor, then you will be able to create general rules,” he says. “We are not there yet, but knowledge graphs are introducing this, so using a knowledge graph as a guide and instructor for an ML learning process is what the future might look like.”

While corporations grapple with implementing graphs, tech companies are already embedding even more sophisticated functionality in the form of GNNs.
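GNNs learn from graph structure through message passing: each node updates its representation by combining its own features with an aggregate of its neighbors' features. The pure-Python sketch below shows one such layer with a mean aggregator, similar in spirit to GraphSAGE. The graph, features and weight matrices are hypothetical, and in a real model the weights would be learned by gradient descent rather than fixed by hand.

```python
# Hypothetical undirected graph with four nodes and 2-dimensional node features.
EDGES = [(0, 1), (1, 2), (2, 3)]
FEATURES = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]

# In a trained GNN these weights are learned; here they are fixed for illustration.
W_SELF = [[1.0, 0.0], [0.0, 1.0]]
W_NEIGH = [[0.5, 0.0], [0.0, 0.5]]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def adjacency(edges, num_nodes):
    """Neighbor lists for an undirected graph."""
    adj = [[] for _ in range(num_nodes)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    return adj


def gnn_layer(features, adj, w_self, w_neigh):
    """One message-passing step: own features plus the mean of neighbors' features, then ReLU."""
    updated = []
    for node, h in enumerate(features):
        nbrs = adj[node]
        mean = [
            sum(features[u][i] for u in nbrs) / len(nbrs) if nbrs else 0.0
            for i in range(len(h))
        ]
        combined = [a + b for a, b in zip(matvec(w_self, h), matvec(w_neigh, mean))]
        updated.append([max(0.0, x) for x in combined])  # ReLU nonlinearity
    return updated


adj = adjacency(EDGES, len(FEATURES))
layer1 = gnn_layer(FEATURES, adj, W_SELF, W_NEIGH)
print(layer1)  # [[1.0, 0.5], [0.5, 1.25], [1.0, 1.25], [0.5, 0.5]]
```

Stacking two such layers lets each node's representation reflect neighbors two hops away, which is how GNNs capture wider context from the graph.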

Benevolent AI uses GNNs. “It is our bread-and-butter for finding new ways in which diseases are founded,” says Oechsle. DeepMind, the UK-based AI company owned by Alphabet, and other cutting-edge companies working on some of the most important questions in science are also using GNNs to solve complex problems. DeepMind recently announced it can predict the structure of proteins, a breakthrough that could dramatically speed up the discovery of new drugs. According to DeepMind’s blog post, some of the models used to tackle protein folding, a problem that had stumped scientists for 50 years, work by reasoning directly about the spatial graph of the folded protein.

GNNs are also playing an important role in drug discovery. “Based on a recent highly influential study from MIT, you can ask whether a molecule inhibits a particular strain of [an infection] and predict yes or no, and then once you have a model trained like this you can apply it to new molecules you have never seen before and still predict with a high probability the top 100 that will have the highest probability of working and send them to chemists for further study,” says Petar Velickovic, a senior research scientist at DeepMind.

Velickovic worked directly on deploying GNNs to help Google Maps predict travel times more accurately. Meanwhile, some of the world’s large e-commerce companies are using GNNs to suggest diversified complementary purchases. Social networks use them to build friend graphs, while Pinterest, an image sharing and social media service, uses them to suggest additional relevant content to its users, says panelist Will Hamilton, who helped Pinterest develop its GNN technology.

For a long time there has been a divide between the ML and AI techniques under development, explains Hamilton, an assistant professor of computer science at McGill University and a Canada CIFAR AI Chair (CIFAR is a Canadian-based global research organization). “On one side we had these continuous signal processing things like convolutional neural networks, techniques, and mathematics coming from a perspective of how can we transform this signal and do continuous pattern recognition and how can we learn deep representations. On the other side, we have a long history of logic AI with expert systems using the idea of symbolic representation.” Knowledge graphs are where these two sides converge. “We’re actually starting to build GNNs which have deep mathematical relationships to signal processing and convolutions,” says Hamilton. “They are being trained and optimized and used to actually simulate logical observations, to do things like querying and logical problem solving on top of the knowledge graph structure. This is very exciting, as this is the first time that we know of where we have had this successful merger of these two different streams of thought on a deep theoretical level.”

This theoretical bridge has the potential to have a real impact on real-world applications by making modern AI systems more reliable and robust, says Hamilton. For example, it is critical that interactive dialogue systems do not state false facts or use harmful language, he says. It is difficult to ensure this kind of robustness in purely statistical deep learning models, since they are inherently unpredictable. Merging the power of deep learning techniques with the robustness and systematicity of logical rule-based methods offers one major avenue to address these concerns.

“If we can bridge this divide, then we can stop having to trade off between high-performing but not very robust deep learning techniques and very stable, but brittle, easy-to-predict rule-based systems,” says Hamilton. “The real opportunity is combining these two worlds and harvesting the best of both.”

To access more of The Innovator’s Deep Dive articles click here.